Outage post-mortem: poisonous cookie

python post-mortemTL;DR: the standard Cookie library cannot handle the malformed cookie persisted by the 3rd party javascript library due to this bug, which fails our authentication system.

Recently, the SurveyMonkey Contribute, SMC thereafter, had an outage: a group of users could not login or take survey in our site. They were bounced back and forth between the home page and login page until the browser was tired of this game and threw the towel.

Before we jump to the technical details, let me explain how the authentication works in SMC:

-

We use pyramid’s session to track the authentication: the session data is persisted to the redis, and the session cookie is set to the browser after the user successfully logins.

-

As a SurveyMonkey product, we also honor the auth cookie issued from

*.surveymonkey.comin the login page, we will automatically log the user in and create the session if she can be authenticated via the auth cookie.

It looked like the authentication by auth cookie worked as expected, but the session cookie was somehow corrupted. When the user was redirected from the login page to the home page, the home page failed to authenticate the user with the session cookie, thus it redirected the user to the login page again, — which incurred the redirect loop.

Our first instinct was the cookie was messed up in the client side as the issue only reproduced to specific users, it simply did not reproduce in my machine. But my colleague, Nate managed to reproduce the issue in the Chrome’s incognito mode and also Firefox. No.

Our second guess was the redis operation issue. No, we verified that the session data were correctly persisted to the redis.

We need to dig deeper.

Debug in a sandbox

Luckily (or unluckily?), Nate also encountered this issue in the testing environment, so we could debug this issue in a sandbox with the liberty to poke around.

First we replicated virtualenv in the testing environment, then we started a

paster instance with the same app.ini but bound to an unused port:

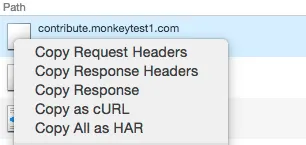

/tmp/debugging/bin/pserve /tmp/app.ini --server-name=paste httpport=8888Thanks to the Chrome Developer Tools, we can replay the request in curl: click

the Network tab in Chrome Developer Tools, and right click the request to

replay, and choose Copy as cURL from the context menu, see below:

And in another terminal, run the curl command with the overridden endpoint and

host header to hit the paster instance in our sandbox:

curl localhost:8888 -H 'Host: contribute.example.com' [... remaining options]It’s all about cookie

With the verbose DEBUG logging enabled, Nate pointed out that during the redirects, only the setter of the session was invoked, the getter was never called. How this could be possible?

After setting multiple breakpoints and poking around, the following snippet caught our attention:

if self.use_cookies:

cookieheader = request.get('cookie', '')

if secret:

try:

self.cookie = SignedCookie(secret, input=cookieheader)

except Cookie.CookieError:

self.cookie = SignedCookie(secret, input=None)

else:

self.cookie = Cookie.SimpleCookie(input=cookieheader)This piece of code is how beaker loads the session key by parsing the cookie

header, and we found that the Cookie.CookieError were always raised during the

redirects! Since SignedCookie is a thin wrapper of Cookie.BaseCookie, we can

reproduce the issue as:

>>> import Cookie

>>> Cookie.BaseCookie('''... the customer cookie ...''')

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/lib/python2.7/Cookie.py", line 579, in __init__

if input: self.load(input)

File "/usr/lib/python2.7/Cookie.py", line 632, in load

self.__ParseString(rawdata)

File "/usr/lib/python2.7/Cookie.py", line 665, in __ParseString

self.__set(K, rval, cval)

File "/usr/lib/python2.7/Cookie.py", line 585, in __set

M.set(key, real_value, coded_value)

File "/usr/lib/python2.7/Cookie.py", line 460, in set

raise CookieError("Illegal key value: %s" % key)

Cookie.CookieError: Illegal key value: proxy:srcTag:yjEureka! The CookieError was raised due to the malformed key proxy:srcTag:yj

when beaker tries to load the session from the cookie; then the cookie was

discarded, which effectively ignored the session.

But why the auth cookie is recognized in the login page, should the CookieError also be raised?

Further literature research showed that the pyramid reinvented its own wheel and did NOT depend on the standard Cookie library.

Who set the cookie, why they set an invalid cookie that may get discarded?

The Google search shows that the cookie might be set by BrightTag, a tracking service provider. It is later confirmed by searching “proxy:srcTag:yj” in the source code.

The cookie name is specified in the RFC6265 as a token, which is defined in the RFC2616 as:

token = 1*<any CHAR except CTLs or separators>

separators = "(" | ")" | "<" | ">" | "@"

| "," | ";" | ":" | "\" | <">

| "/" | "[" | "]" | "?" | "="

| "{" | "}" | SP | HTThough the constraints are not enforced by many browsers according to the Wikipedia. This issue is also acknowledged by the core python developers, and fixed in python 2.7.10. Later, my colleague Junkang pointed out that the bug is also fixed in beaker 1.8.0.

Lessons learned

Progressive stack upgrade

We take a don’t-upgrade-until-it-breaks approach for our stack, which incurs technical debts over the time. We could potentially avoid this outage if we upgraded the stack more aggressively. We plan to progressively upgrade our stack routinely to strike a balance between the cutting-edge and stableness.

Audit the 3rd party javascript libraries

We integrated a handful 3rd party javascript libraries with great trust in our web site. Just as our code, the 3rd party softwares may have bugs, or oversight of the corner cases. It not only impacts our customers’ experience, but also opens another attacking surface from the security perspective. It is not trivial to audit a minified, uglified 3rd party javascript library, but at least we can:

- List all 3rd party javascript libraries we consume and their dependencies.

- Identify how they are used, and only include the libraries in the pages which require the specific functionality.

- Track the content or checksum, get notified for the new release.

Want to join us to help people make great decisions? We are hiring!